Coverage of artificial intelligence has reached fever pitch and now one academic suggests it might become a “duty” for boards to use AI in their decision making.

The conclusion comes from legal scholar Floris Mertens, based at the University of Ghent, after surveying legal systems around the world. It arrives amid intense discussion of how AI, or “robots”, could fit into boardroom deliberations.

“Considering that the analytical capabilities of AI may be superior to those of humans for a number of specific tasks, the ubiquitous expectation for directors to act on a well-informed basis may very well evolve into the duty to rely on the output of AI,” writes Mertens.

The “duty” speculation comes at a time when AI appears to be making headlines nearly every day. This week the law firm Allen & Overy said it was introducing AI to write contracts and letters to clients; headlining DJ David Guetta has used AI to voice a song; and the chancellor, Jeremy Hunt, used the AI software ChatGPT to compose part of a recent speech. The world was impressed.

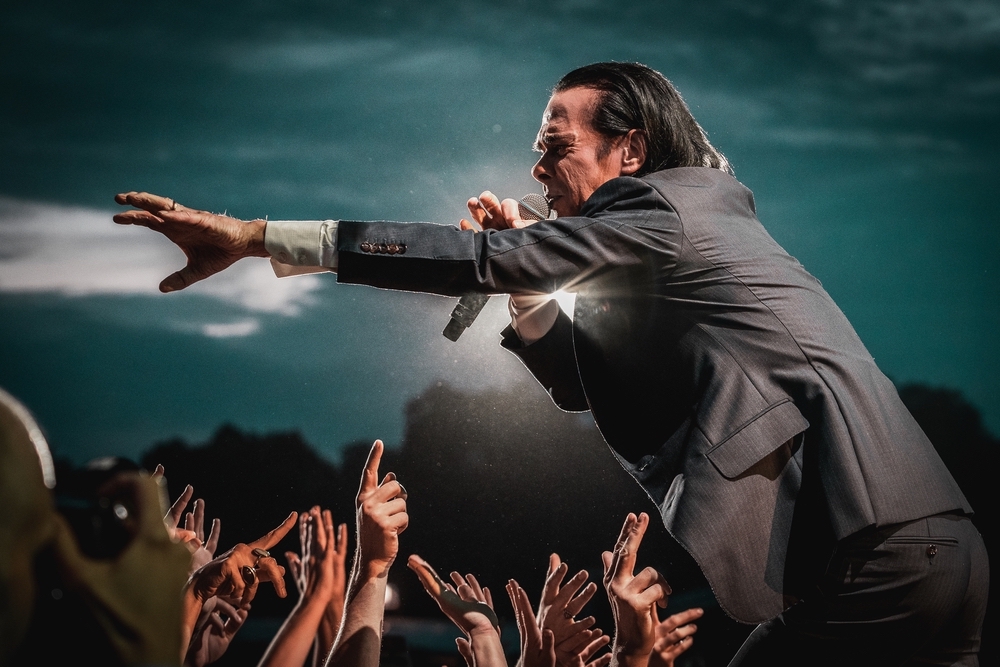

Though not everyone. Stocks in Google fell after its AI chatbot produced incorrect information, and musician and artist Nick Cave described lyrics, drafted by ChatGPT after his own style, as a “grotesque mockery of what it is to be human”.

Mertens is far from considering AI “grotesque” but he does believe the chances of AI becoming a full-blown boardroom decision maker are slim given most legal systems require directors to be “natural” persons and not an endless stream of code flashing around a processor.

Some, however, see the merits of robots taking over as directors. According to some observers, AI will, in theory, offer better governance because algorithms provide consistent decision making; robots don’t suffer from conflicts of interest (reducing agency costs); they can process vast quantities of data and work long hours. They could also reduce litigation costs and counter groupthink. Skills and expertise can just be programmed in.

That all sounds attractive. But given pressure on business to apply “stakeholder” governance and societal values, it might be more difficult than it seems for robots to consider the fine balances needed in decision making (we’ve all seen the movies).

That said, Mertens points out that Delaware case law already has a potential duty of AI delegation, though he anticipates a “duty” cropping up elsewhere only when “data analysis is at the basis of a decision”.

Code of law

Even if a robot were permitted to be a director, there would be a question of how to resolve big legal issues, such as where liability resides for an AI decision. The board or the programmers?

Key legal questions still need to be resolved, which may require the regulation of “corporate purposes” for AI, embedding “rule-compliant behaviour” into code and a thoroughgoing policy debate over the liability question.

Others have argued elsewhere that relatively “minor” changes in the law could clear the way for boardroom robots, while some note the introduction of AI would also need a change in company constitutions.

It’s also worth bearing in mind whether board directors are ready to work alongside AI. A recent survey by Brunel University found that directors feel there is “insufficient” work underway on AI ethics. That echoes previous assertions that AI presents new “ethical risks” and suggests board directors may not have a lot of faith in the current state of the technology to produce consistent and reliable decisions.

Mertens says we may never see actual AI as board directors, but it may be a useful tool for board members. He also has a warning. A review of the law is “needed” as an important first step and to ensure “that novel corporate rules are not dictated by the quickly evolving AI technology, but based on calm reasoning”. And that, it has to be said, would be human reasoning. Good luck everybody.